SVD#

Let’s break down Singular Value Decomposition (SVD) with SageMath to make it both intuitive and practical.

Conceptual Understanding of SVD

Think of SVD as a way to “factorize” a matrix into three simpler matrices. Just like you can factorize a number into primes, SVD decomposes a matrix into:

Singular Values (Σ): These are non-negative numbers, representing the “magnitude” or “importance” of each dimension in your data. They’re arranged in decreasing order, so the largest singular value often captures the most significant feature in your matrix.

Left Singular Vectors (U): These are orthonormal (perpendicular and unit length) vectors that span the column space of your original matrix. They tell you about the “directions” of the most important features.

Right Singular Vectors (V): Also orthonormal, these vectors span the row space of your matrix. They give you information about how your original data points (rows) relate to the important features.

The key equation:

A = UΣVᵀ

(where A is your original matrix)

Geometric Intuition

Imagine your matrix represents data points in a high-dimensional space. SVD helps you:

Find the principal axes (directions of greatest variance) in this space – these are given by the singular vectors.

Stretch or compress the space along these axes based on the singular values.

Rotate the axes to align with the original coordinate system.

SageMath Examples

Let’s use SageMath to illustrate these concepts.

import numpy as np

from sage.all import *

# Create a random matrix (you can use your own data here)

A = random_matrix(RDF, 4, 3)

print("Matrix A:\n")

show(A.n(digits=2))

# Compute the SVD

U, S, V = A.SVD()

print("\nLeft Singular Vectors (U):\n")

show(U.n(digits=2))

print("\nSingular Values (S) (diagonal of Σ):\n")

show(S.n(digits=2))

print("\nRight Singular Vectors (V):\n")

show(V.n(digits=2))

Matrix A:

Left Singular Vectors (U):

Singular Values (S) (diagonal of Σ):

Right Singular Vectors (V):

Explanation of the Code

The code begins by importing necessary libraries:

numpyfor numerical operations andsage.allfor SageMath functionality.It generates a random matrix

Awith dimensions 4x3 using therandom_matrixfunction from SageMath’sRDF(real double field).The singular value decomposition (SVD) of the matrix

Ais computed using theSVD()method.The results of the SVD are stored in the variables

U,S, andV, representing the left singular vectors, singular values, and right singular vectors, respectively.The script prints the original matrix

A, followed by the left singular vectorsU, singular valuesS(as the diagonal elements of the diagonal matrix Σ), and right singular vectorsV.

Applications of SVD

Dimensionality Reduction: Keep only the top k singular values/vectors to approximate your data with fewer dimensions (Principal Component Analysis – PCA).

k = 2

A_k = U[:, :k] * S[:k, :k] * V[:k, :]

print("\nReduced Matrix (k=2):\n")

show(A_k.n(digits=2))

Reduced Matrix (k=2):

Image Compression: Store only the largest singular values and corresponding vectors to compress an image.

Recommender Systems: Use SVD to factorize user-item ratings matrices, filling in missing ratings.

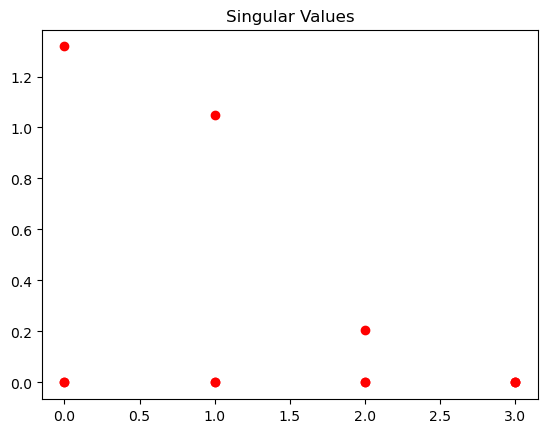

SageMath Visualization

import matplotlib.pyplot as plt

# Plot singular values

plt.plot(S, 'ro')

plt.title('Singular Values')

plt.show()

This visualization shows you a simple line plot where:

The x-axis represents the index of each singular value (e.g., 1, 2, 3…).

The y-axis represents the magnitude of each singular value.

Interpreting the Plot

The plot helps you visualize the following:

Relative Importance: The height of each red circle indicates the importance or “strength” of the corresponding feature or dimension captured by the singular value. Higher circles mean more significant features.

Decay: Typically, singular values decay rapidly. This means the first few singular values are much larger than the later ones, suggesting that most of the information in your original matrix is captured by just a few dimensions.

Why Visualize Singular Values?

This visualization is helpful for:

Dimensionality Reduction: You can visually decide how many singular values (and their corresponding vectors) to keep for dimensionality reduction techniques like PCA.

Understanding Data Structure: The plot gives you insight into the underlying structure of your data – how many dominant features there are and how quickly the importance of features diminishes.

Understanding Principal Component Analysis#

PCA is a powerful technique for dimensionality reduction. It simplifies complex datasets by transforming them into a new set of variables, called principal components (PCs). These PCs are ordered by the amount of variance they explain in the original data.

Goal:

To find the directions (principal components) along which our data varies the most.

To reduce the number of variables while retaining as much information as possible.

Key Ideas:

Variance: PCA aims to maximize the variance captured by each PC.

Orthogonality: PCs are uncorrelated (orthogonal) to each other.

Dimensionality Reduction: We can often represent the data well using only a few PCs.

SageMath Example and Code

import numpy as np

from sage.plot.scatter_plot import scatter_plot

from sage.plot.point import point

# Generate some sample data

np.random.seed(42)

mean = [int(2), int(3)]

cov = [[int(2), int(1)], [int(1), int(3)]]

data = np.random.multivariate_normal(mean, cov, int(100))

# Plot the original data

scatter = point(data.tolist(), color='blue')

# Center the data:

# Subtracts the mean of each feature (column) from the data,

# effectively shifting the data's center to the origin.

centered_data = data - np.mean(data, axis=0)

# Compute the covariance matrix

# This matrix describes how the different features vary together

cov_matrix = np.cov(centered_data, rowvar=False)

# Compute the eigenvalues and eigenvectors

# Eigenvalues: Represent the amount of variance

# explained by each principal component.

# Eigenvectors: Define the directions of the principal

# components.

eigenvalues, eigenvectors = np.linalg.eig(cov_matrix)

# Sort eigenvalues and eigenvectors in descending order

# This ensures that the first principal component captures

# the most variance.

idx = eigenvalues.argsort()[::-1]

eigenvalues = eigenvalues[idx]

eigenvectors = eigenvectors[:, idx]

# Projects the centered data onto the principal components by

# multiplying it with the eigenvectors. This transformation

# finds the coordinates of the data points in the new space

# defined by the principal components.

projected_data = centered_data @ eigenvectors

# Plot the principal components

origin = [0, 0, 0]

pc1 = eigenvectors[:, 0]

pc2 = eigenvectors[:, 1]

scatter += point(origin + list(pc1), color='red', size=50) # PC1

scatter += point(origin + list(pc2), color='green', size=50) # PC2

# Plot the projected data

for point_data in projected_data.tolist():

scatter += point(point_data, color='pink')

# Show the plot

scatter.show(aspect_ratio=1)

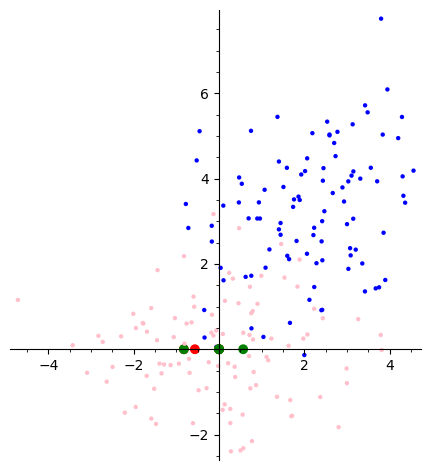

Let’s break down this code step by step and understand the visualization it produces.

Blue Dots: Original data points, potentially showing some correlation between the two features.

Red and Green Points (PC1 and PC2): These points represent the endpoints of unit vectors along the first (PC1) and second (PC2) principal components. The lines connecting these points to the origin show the directions of maximum variance in the data.

Pink Dots: Data points projected onto the principal components. This shows how the original data is distributed along the new axes defined by PC1 and PC2.

Key Idea of PCA

Principal Component Analysis (PCA) is a dimensionality reduction technique. It finds new axes (principal components) that maximize the variance of the data when projected onto them. In this case:

PC1: Captures the most variance in the data, meaning it’s the direction along which the data points are most spread out.

PC2: Captures the second most variance, and is orthogonal (perpendicular) to PC1.

By transforming the data onto these new axes, PCA can help visualize and analyze high-dimensional data more easily, often revealing underlying patterns or relationships.